
📄 Dataset used - Bus & Truck object detection

DataSet.zip (drive.google.com)

- Bus&Truck DataSet

- fasterrcnn_resnet50_fpn model

- Dataset, DataLoader, and Train/Val logic organized into classes and functions

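1. Creating a virtual environment

- Install pipenv (the virtual environment tool)

pip install pipenv

- Specify the Python version for the virtual environment

pipenv --python 3.8

- Activate the virtual environment

pipenv shell

- Install ipykernel, which lets Jupyter Notebook run code inside this environment

pipenv install ipykernel

- Register the environment as a Jupyter Notebook kernel

python -m ipykernel install --user --display-name (kernel name shown in Jupyter Notebook) --name (kernel name; the virtual environment name is used here)

python -m ipykernel install --user --display-name yesung2 --name yesung2

2. Installing and importing the required modules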

import os

import cv2

import torch

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings('ignore')

from collections import defaultdict

from ipywidgets import interact

from torch.utils.data import DataLoader

from torchvision import models, transforms

from torchvision.utils import make_grid

from torchvision.transforms import functional as F

from torchvision.models.detection.faster_rcnn import FastRCNNPredictor

from util import CLASS_NAME_TO_ID, visualize, save_model

import util

from torchvision.ops import nms

3. Loading the file information into a DataFrame

data_dir = './DataSet/'

data_df = pd.read_csv(os.path.join(data_dir, 'df.csv'))

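data_df

- ImageID: file name without the extension (image ID)

- LabelName: class label of an object in the image (Bus or Truck)

- XMin, XMax, YMin, YMax: bounding-box coordinates, normalized to the 0-1 range by the image width and height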
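Because the coordinates are normalized, turning one image's rows into pixel boxes is a row filter followed by two multiplications. A minimal sketch, assuming the column layout described above; the DataFrame rows and the helper name `boxes_for_image` are hypothetical:

```python
import numpy as np
import pandas as pd

# Hypothetical rows in the same layout as df.csv (values invented for illustration)
data_df = pd.DataFrame({
    'ImageID': ['img_a', 'img_a', 'img_b'],
    'LabelName': ['Bus', 'Truck', 'Bus'],
    'XMin': [0.10, 0.50, 0.00],
    'XMax': [0.40, 0.90, 1.00],
    'YMin': [0.20, 0.30, 0.10],
    'YMax': [0.60, 0.80, 0.50],
})

def boxes_for_image(df, image_id, img_w, img_h):
    """Return pixel [x_min, y_min, x_max, y_max] boxes and label names for one image."""
    rows = df[df['ImageID'] == image_id]
    # Reorder the columns to corner format while converting to a float32 array
    boxes = rows[['XMin', 'YMin', 'XMax', 'YMax']].to_numpy(dtype=np.float32)
    boxes[:, [0, 2]] *= img_w  # x values scale with the width
    boxes[:, [1, 3]] *= img_h  # y values scale with the height
    return boxes, rows['LabelName'].tolist()

boxes, labels = boxes_for_image(data_df, 'img_a', img_w=256, img_h=170)
print(boxes)
print(labels)
```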

4. 이미지 데이터 확인하기

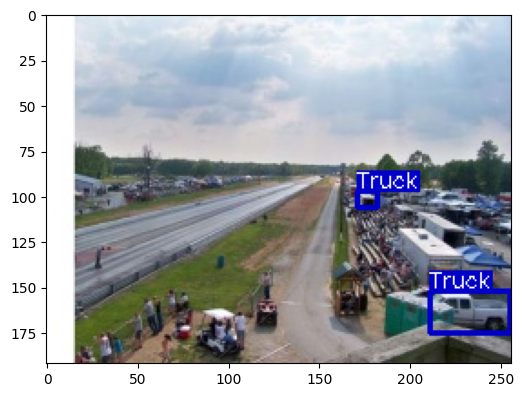

✅ 데이터셋의 0번 인덱스만 확인해보기

index = 0

image_files = [fn for fn in os.listdir('./DataSet/train/') if fn.endswith('jpg')] # keep only files whose names end in "jpg"

image_file = image_files[index]

image_file # the file at index 0

>>> '0000599864fd15b3.jpg'

# full path of the image file

image_path = os.path.join('./DataSet/train/', image_file)

image_path

>>> './DataSet/train/0000599864fd15b3.jpg'

# shape of the image

image = cv2.imread(image_path)

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) # h, w, c

image.shape

>>> (170, 256, 3)

plt.imshow(image)
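`cv2.imread` returns pixels in BGR channel order, which is why the code calls `cv2.cvtColor(..., cv2.COLOR_BGR2RGB)` before `plt.imshow`. For a 3-channel image this conversion amounts to reversing the channel axis; a NumPy-only illustration on a made-up 1x2 image:

```python
import numpy as np

# A made-up 1x2 image in BGR order, shape (h, w, c) like cv2.imread returns
bgr = np.array([[[255, 0, 0],    # pure blue in BGR
                 [0, 0, 255]]],  # pure red in BGR
               dtype=np.uint8)

# Reversing the channel axis gives RGB, which matplotlib expects.
# This is a view with negative strides; OpenCV drawing functions may need
# np.ascontiguousarray(rgb) before they accept it.
rgb = bgr[..., ::-1]

h, w, c = rgb.shape
print(h, w, c)
print(rgb[0, 0], rgb[0, 1])
```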

5. Creating util.py

- A module that collects helper functions shared by other Python scripts and projects

- Written in PyCharm

import os
import cv2
import torch
import matplotlib.pyplot as plt

# Constants
CLASS_NAME_TO_ID = {'Bus': 0, 'Truck': 1}
CLASS_ID_TO_NAME = {0: 'Bus', 1: 'Truck'}
BOX_COLOR = {'Bus': (200, 0, 0), 'Truck': (0, 0, 200)}
TEXT_COLOR = (255, 255, 255)


# Save model weights (e.g. the best model's state_dict) to save_dir/model_name
def save_model(model_state, model_name, save_dir='./trained_model'):
    os.makedirs(save_dir, exist_ok=True)  # create the folder; no error if it already exists
    torch.save(model_state, os.path.join(save_dir, model_name))


# Draw one bounding box given in center format (x_center, y_center, w, h), in pixels
def visualize_bbox(image, bbox, class_name, color=BOX_COLOR, thickness=2):
    x_center, y_center, w, h = bbox
    x_min = int(x_center - w / 2)
    y_min = int(y_center - h / 2)
    x_max = int(x_center + w / 2)
    y_max = int(y_center + h / 2)

    cv2.rectangle(image, (x_min, y_min), (x_max, y_max), color=color[class_name], thickness=thickness)

    # Measure the label text so a filled background box can be drawn behind it
    ((text_width, text_height), _) = cv2.getTextSize(class_name, cv2.FONT_HERSHEY_SIMPLEX, 0.4, 1)
    cv2.rectangle(image, (x_min, y_min - int(1.3 * text_height)),
                  (x_min + text_width, y_min), color[class_name], -1)
    cv2.putText(image,
                text=class_name,
                org=(x_min, y_min - int(0.3 * text_height)),  # bottom-left corner of the text
                fontFace=cv2.FONT_HERSHEY_SIMPLEX,
                fontScale=0.4,
                color=TEXT_COLOR)
    return image


# Draw all boxes of one image on a copy of it (the image is expected to be RGB already)
def visualize(image, bboxes, category_ids):
    img = image.copy()
    for bbox, class_id in zip(bboxes, category_ids):
        class_name = CLASS_ID_TO_NAME[class_id]      # class id -> class name
        img = visualize_bbox(img, bbox, class_name)  # bbox: (x_center, y_center, w, h)
    return img

6. Creating the function to visualize the image data

@interact(index=(0, len(image_files) - 1))
def show_sample(index=0):
    image_file = image_files[index]
    image_path = os.path.join('./DataSet/train/', image_file)
    image = cv2.imread(image_path)
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

    # The file name without its extension is the ImageID
    image_id = image_file.split('.')[0]
    # Rows of the DataFrame that belong to this image
    meta_data = data_df[data_df['ImageID'] == image_id]
    # Label names
    cate_names = meta_data['LabelName'].values
    # Normalized box coordinates: [XMin, XMax, YMin, YMax]
    bboxes = meta_data[['XMin', 'XMax', 'YMin', 'YMax']].values
    # Image height and width
    img_H, img_W, _ = image.shape
    # Label names -> class ids
    class_ids = [CLASS_NAME_TO_ID[cate_name] for cate_name in cate_names]

    # Convert the boxes to center format (x_center, y_center, w, h)
    unnorm_bboxes = bboxes.copy()
    unnorm_bboxes[:, [1, 2]] = unnorm_bboxes[:, [2, 1]]   # -> [XMin, YMin, XMax, YMax]
    unnorm_bboxes[:, 2:4] -= unnorm_bboxes[:, 0:2]        # -> [XMin, YMin, w, h]
    unnorm_bboxes[:, 0:2] += (unnorm_bboxes[:, 2:4] / 2)  # -> [x_center, y_center, w, h]

    # Scale the normalized values to pixels using the image width and height
    unnorm_bboxes[:, [0, 2]] *= img_W
    unnorm_bboxes[:, [1, 3]] *= img_H

    canvas = util.visualize(image, unnorm_bboxes, class_ids)
    plt.figure(figsize=(8, 6))
    plt.imshow(canvas)

7. Wrapping the dataset in a class

class Detection_dataset():
    def __init__(self, data_dir, phase, transformer=None):
        self.data_dir = data_dir
        self.phase = phase
        self.data_df = pd.read_csv(os.path.join(self.data_dir, 'df.csv'))
        self.image_files = [fn for fn in os.listdir(os.path.join(self.data_dir, phase)) if fn.endswith('jpg')]
        self.transformer = transformer

    def __len__(self):
        return len(self.image_files)

    def __getitem__(self, index):
        filename, image = self.get_image(index)
        # Normalized boxes [XMin, YMin, XMax, YMax] and class ids for this image
        bboxes, class_ids = self.get_label(filename)
        img_H, img_W, _ = image.shape
        if self.transformer:
            image = self.transformer(image)
            _, img_H, img_W = image.shape  # (C, H, W): the transformer may have changed the size

        # Normalized coordinates -> pixel coordinates of the (possibly resized) image
        bboxes[:, [0, 2]] *= img_W
        bboxes[:, [1, 3]] *= img_H

        target = {}  # target dict in the format torchvision detection models expect
        target['boxes'] = torch.Tensor(bboxes).float()     # box coordinates as a float tensor
        target['labels'] = torch.Tensor(class_ids).long()  # class labels as an int64 tensor
        # Return three items: image, target, file name
        return image, target, filename

    def get_image(self, index):
        filename = self.image_files[index]
        image_path = os.path.join(self.data_dir, self.phase, filename)  # e.g. './DataSet/train/filename'
        image = cv2.imread(image_path)
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        return filename, image

    def get_label(self, filename):
        image_id = filename.split('.')[0]
        # use the DataFrame loaded in __init__, not the global data_df
        meta_data = self.data_df[self.data_df['ImageID'] == image_id]
        cate_names = meta_data['LabelName'].values
        class_ids = [CLASS_NAME_TO_ID[cate_name] for cate_name in cate_names]
        bboxes = meta_data[['XMin', 'XMax', 'YMin', 'YMax']].values
        bboxes[:, [1, 2]] = bboxes[:, [2, 1]]  # -> [XMin, YMin, XMax, YMax]
        return bboxes, class_ids
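torchvision's detection models expect `target['boxes']` in absolute `[x_min, y_min, x_max, y_max]` pixel coordinates, and in training mode they raise an error when a box has zero or negative width or height. If an annotation collapses after resizing, filtering such boxes in `__getitem__` may help. A NumPy sketch; the helper name `drop_degenerate_boxes` and its `min_size` threshold (which is stricter than torchvision's check) are assumptions, not part of the original code:

```python
import numpy as np

def drop_degenerate_boxes(boxes, class_ids, min_size=1.0):
    """Keep only boxes whose width and height are at least min_size pixels.

    boxes: (N, 4) array of [x_min, y_min, x_max, y_max] in pixels.
    class_ids: sequence of N class ids aligned with boxes.
    """
    boxes = np.asarray(boxes, dtype=np.float32).reshape(-1, 4)
    class_ids = np.asarray(class_ids, dtype=np.int64)
    widths = boxes[:, 2] - boxes[:, 0]
    heights = boxes[:, 3] - boxes[:, 1]
    keep = (widths >= min_size) & (heights >= min_size)
    return boxes[keep], class_ids[keep]

boxes = np.array([[10, 20, 110, 80],   # valid 100 x 60 box
                  [50, 50, 50, 90]])   # zero width, dropped
kept_boxes, kept_ids = drop_degenerate_boxes(boxes, [0, 1])
print(kept_boxes, kept_ids)
```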

✅ Create the dataset without a transformer

data_dir = './DataSet/'

dataset = Detection_dataset(data_dir=data_dir, phase='train', transformer=None)

dataset[0]

✅ View the sample at index 20

index = 20
image, target, filename = dataset[index]
target, filename

boxes = target['boxes'].numpy()
class_ids = target['labels'].numpy()
# number of objects in the image (boxes has shape (n_obj, 4), e.g. (2, 4))
n_obj = boxes.shape[0]
# zero-filled (n_obj, 4) array for the converted boxes
bboxes = np.zeros(shape=(n_obj, 4), dtype=np.float32)
bboxes[:, 0:2] = (boxes[:, 0:2] + boxes[:, 2:4]) / 2  # center coordinates
bboxes[:, 2:4] = (boxes[:, 2:4] - boxes[:, 0:2])      # width and height

canvas = util.visualize(image, bboxes, class_ids)

plt.figure(figsize=(6,6))

plt.imshow(canvas)

plt.show()
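The cells above convert between two box formats: corner format `[x_min, y_min, x_max, y_max]` (what `Detection_dataset` returns and what torchvision expects) and center format `[x_center, y_center, w, h]` (what `util.visualize` draws). A sketch of both directions as reusable NumPy functions; the names `xyxy_to_cxcywh` and `cxcywh_to_xyxy` are hypothetical:

```python
import numpy as np

def xyxy_to_cxcywh(boxes):
    """[x_min, y_min, x_max, y_max] -> [x_center, y_center, w, h]."""
    boxes = np.asarray(boxes, dtype=np.float32)
    out = np.empty_like(boxes)
    out[:, 0:2] = (boxes[:, 0:2] + boxes[:, 2:4]) / 2  # centers
    out[:, 2:4] = boxes[:, 2:4] - boxes[:, 0:2]        # width, height
    return out

def cxcywh_to_xyxy(boxes):
    """[x_center, y_center, w, h] -> [x_min, y_min, x_max, y_max]."""
    boxes = np.asarray(boxes, dtype=np.float32)
    out = np.empty_like(boxes)
    out[:, 0:2] = boxes[:, 0:2] - boxes[:, 2:4] / 2
    out[:, 2:4] = boxes[:, 0:2] + boxes[:, 2:4] / 2
    return out

xyxy = np.array([[10, 20, 110, 80]], dtype=np.float32)
cxcywh = xyxy_to_cxcywh(xyxy)
print(cxcywh)                  # center (60, 50), size 100 x 60
print(cxcywh_to_xyxy(cxcywh))  # back to the original corners
```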

✅ Visualize the dataset with interact

@interact(index=(0, len(dataset) - 1))
def show_sample(index=0):
    image, target, filename = dataset[index]
    boxes = target['boxes'].numpy()
    class_ids = target['labels'].numpy()

    # Corner format -> center format for util.visualize
    n_obj = boxes.shape[0]
    bboxes = np.zeros(shape=(n_obj, 4), dtype=np.float32)
    bboxes[:, 0:2] = (boxes[:, 0:2] + boxes[:, 2:4]) / 2
    bboxes[:, 2:4] = boxes[:, 2:4] - boxes[:, 0:2]

    canvas = util.visualize(image, bboxes, class_ids)
    plt.figure(figsize=(6, 6))
    plt.imshow(canvas)
    plt.show()

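8. Creating the transformer

IMAGE_SIZE = 448

# [0.485, 0.456, 0.406], [0.229, 0.224, 0.225]

# These are the per-channel mean and standard deviation of ImageNet. The ResNet-50 backbone of Faster R-CNN was pretrained on ImageNet with this normalization, so the same values are used here.
# Note: torchvision's FasterRCNN also normalizes its inputs internally with these same values (GeneralizedRCNNTransform in the model printout in section 10), so applying Normalize here as well normalizes the images twice.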

transformer = transforms.Compose([

transforms.ToTensor(),

transforms.Resize(size=(IMAGE_SIZE, IMAGE_SIZE)),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])
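`transforms.ToTensor` scales pixels to 0-1 and `transforms.Normalize` then computes `(x - mean) / std` per channel. Undoing that (multiply by std, add mean) is what is needed before drawing a normalized image. A NumPy sketch of both directions on a channel-last image; the function names are hypothetical, and torchvision itself works on channel-first tensors:

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

def normalize(image_uint8):
    """uint8 (H, W, 3) -> float32 (H, W, 3), like ToTensor + Normalize but channel-last."""
    x = image_uint8.astype(np.float32) / 255.0  # ToTensor: 0-255 -> 0-1
    return (x - MEAN) / STD                      # Normalize: per-channel (x - mean) / std

def denormalize(image_norm):
    """Inverse of normalize, returning uint8 (H, W, 3) for display."""
    x = image_norm * STD + MEAN
    return (np.clip(x, 0.0, 1.0) * 255.0).round().astype(np.uint8)

white = np.full((2, 2, 3), 255, dtype=np.uint8)
norm = normalize(white)
print(norm[0, 0])  # roughly [2.249, 2.429, 2.64]
```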

✅ Create the training dataset with the transformer applied

transformed_dataset = Detection_dataset(data_dir=data_dir, phase='train', transformer=transformer)

index = 0

image, target, filename = transformed_dataset[index]

image.shape

>>> torch.Size([3, 448, 448])

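✅ Visualize the bounding boxes

boxes = target['boxes'].numpy()
class_ids = target['labels'].numpy()
n_obj = boxes.shape[0]
bboxes = np.zeros(shape=(n_obj, 4), dtype=np.float32)
bboxes[:, 0:2] = (boxes[:, 0:2] + boxes[:, 2:4]) / 2  # center coordinates
bboxes[:, 2:4] = (boxes[:, 2:4] - boxes[:, 0:2])      # width and height

# The transformed image is a normalized (C, H, W) tensor; convert it back to an (H, W, C) uint8 array for drawing
mean = np.array([0.485, 0.456, 0.406])
std = np.array([0.229, 0.224, 0.225])
np_image = image.numpy().transpose(1, 2, 0)      # (C, H, W) -> (H, W, C)
np_image = np.clip(np_image * std + mean, 0, 1)  # undo Normalize
np_image = (np_image * 255).astype(np.uint8)

canvas = util.visualize(np_image, bboxes, class_ids)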

plt.figure(figsize=(6,6))

plt.imshow(canvas)

plt.show()

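9. Creating the DataLoader

9-1. collate function

- After the DataLoader fetches batch_size samples, it passes them as a list to collate_fn, which bundles the samples (text, images, numbers, etc.) into a single batch.

- The default collate function stacks samples into tensors, which fails here because each image has a different number of boxes, so the 'boxes' tensors cannot be stacked. This collate_fn keeps images, targets, and file names as lists instead.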

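def collate_fn(batch):
    # batch is a list of (image, target, filename) tuples from Detection_dataset
    image_list = []
    target_list = []
    filename_list = []
    for img, target, filename in batch:
        image_list.append(img)
        target_list.append(target)
        filename_list.append(filename)
    return image_list, target_list, filename_list  # return the whole list, not just the last filename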
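The same result can be written more compactly with `zip(*batch)`, which is also the idiom used in torchvision's detection reference scripts. A sketch with plain Python stand-ins (strings and dicts of lists) for the tensors so it runs without PyTorch; the sample values are made up:

```python
def collate_fn(batch):
    # zip(*batch) regroups [(img, tgt, name), ...] into (imgs, tgts, names)
    images, targets, filenames = zip(*batch)
    return list(images), list(targets), list(filenames)

# Two fake samples with different numbers of boxes, which is why they cannot be stacked
batch = [
    ("img_a", {"boxes": [[0, 0, 10, 10]], "labels": [0]}, "a.jpg"),
    ("img_b", {"boxes": [[1, 1, 5, 5], [2, 2, 8, 8]], "labels": [1, 0]}, "b.jpg"),
]
images, targets, filenames = collate_fn(batch)
print(filenames)                           # ['a.jpg', 'b.jpg']
print([len(t["boxes"]) for t in targets])  # [1, 2]
```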

def build_dataloader(data_dir, batch_size=4, image_size=448):
    transformer = transforms.Compose([
        transforms.ToTensor(),
        transforms.Resize(size=(image_size, image_size)),  # use the argument, not the global IMAGE_SIZE
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ])
    dataloaders = {}
    train_dataset = Detection_dataset(data_dir=data_dir, phase='train', transformer=transformer)
    dataloaders['train'] = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, collate_fn=collate_fn)
    val_dataset = Detection_dataset(data_dir=data_dir, phase='val', transformer=transformer)
    dataloaders['val'] = DataLoader(val_dataset, batch_size=1, shuffle=False, collate_fn=collate_fn)
    return dataloaders

data_dir = './DataSet/'
dloaders = build_dataloader(data_dir)

# Print the targets of one batch from each of the training and validation loaders
for phase in ['train', 'val']:
    for index, batch in enumerate(dloaders[phase]):
        images = batch[0]
        targets = batch[1]
        filenames = batch[2]
        print(targets)
        # stop after the first batch; one example is enough
        if index == 0:
            break
    print()

10. Loading the model

model = models.detection.fasterrcnn_resnet50_fpn(pretrained=True)  # torchvision >= 0.13 deprecates pretrained=True in favor of weights="DEFAULT"

model

FasterRCNN(

(transform): GeneralizedRCNNTransform(

Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

Resize(min_size=(800,), max_size=1333, mode='bilinear')

)

(backbone): BackboneWithFPN(

(body): IntermediateLayerGetter(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(relu): ReLU(inplace=True)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): FrozenBatchNorm2d(256, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer2): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(512, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer3): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(1024, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(2048, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

)

)

)

(fpn): FeaturePyramidNetwork(

(inner_blocks): ModuleList(

(0): Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

)

(1): Conv2dNormActivation(

(0): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))

)

(2): Conv2dNormActivation(

(0): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))

)

(3): Conv2dNormActivation(

(0): Conv2d(2048, 256, kernel_size=(1, 1), stride=(1, 1))

)

)

(layer_blocks): ModuleList(

(0-3): 4 x Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(extra_blocks): LastLevelMaxPool()

)

)

(rpn): RegionProposalNetwork(

(anchor_generator): AnchorGenerator()

(head): RPNHead(

(conv): Sequential(

(0): Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

)

)

(cls_logits): Conv2d(256, 3, kernel_size=(1, 1), stride=(1, 1))

(bbox_pred): Conv2d(256, 12, kernel_size=(1, 1), stride=(1, 1))

)

)

(roi_heads): RoIHeads(

(box_roi_pool): MultiScaleRoIAlign(featmap_names=['0', '1', '2', '3'], output_size=(7, 7), sampling_ratio=2)

(box_head): TwoMLPHead(

(fc6): Linear(in_features=12544, out_features=1024, bias=True)

(fc7): Linear(in_features=1024, out_features=1024, bias=True)

)

(box_predictor): FastRCNNPredictor(

(cls_score): Linear(in_features=1024, out_features=91, bias=True)

(bbox_pred): Linear(in_features=1024, out_features=364, bias=True)

)

)

)

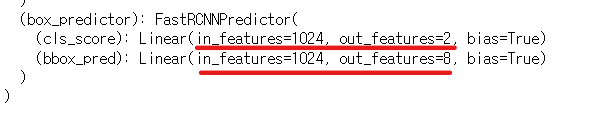

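✅ The box predictor's number of outputs must be changed to our number of classes! (in_features is read from the existing layer and kept as-is)

def build_model(num_classes):
    model = models.detection.fasterrcnn_resnet50_fpn(pretrained=True)
    # input size of the existing box predictor (1024 in the printout above)
    in_features = model.roi_heads.box_predictor.cls_score.in_features
    # replace the 91-class COCO predictor with one that outputs num_classes classes
    model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)
    return model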
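One caveat worth checking: torchvision's Faster R-CNN treats class id 0 as background, and its `num_classes` counts the background class (the torchvision fine-tuning tutorial uses `num_classes = 2` for one object class plus background). With `CLASS_NAME_TO_ID = {'Bus': 0, 'Truck': 1}` and `NUM_CLASSES = 2`, Bus boxes would effectively be treated as background. A sketch of the usual remapping, assuming foreground ids should start at 1; the helper name `make_label_maps` is hypothetical:

```python
def make_label_maps(class_names):
    """Map class names to ids starting at 1, reserving 0 for background."""
    name_to_id = {name: i + 1 for i, name in enumerate(class_names)}
    id_to_name = {i: name for name, i in name_to_id.items()}
    num_classes = len(class_names) + 1  # + 1 for the background class
    return name_to_id, id_to_name, num_classes

CLASS_NAME_TO_ID, CLASS_ID_TO_NAME, NUM_CLASSES = make_label_maps(['Bus', 'Truck'])
print(CLASS_NAME_TO_ID)  # {'Bus': 1, 'Truck': 2}
print(NUM_CLASSES)       # 3
```

With this mapping, `build_model(num_classes=NUM_CLASSES)` would create a predictor with 3 outputs, and the dictionaries in `util.py` would need the same change so that visualization still finds the class names.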

NUM_CLASSES = 2

model = build_model(num_classes=NUM_CLASSES)

model

FasterRCNN(

(transform): GeneralizedRCNNTransform(

Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

Resize(min_size=(800,), max_size=1333, mode='bilinear')

)

(backbone): BackboneWithFPN(

(body): IntermediateLayerGetter(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(relu): ReLU(inplace=True)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): FrozenBatchNorm2d(256, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer2): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(512, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer3): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(1024, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(2048, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

)

)

)

(fpn): FeaturePyramidNetwork(

(inner_blocks): ModuleList(

(0): Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

)

(1): Conv2dNormActivation(

(0): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))

)

(2): Conv2dNormActivation(

(0): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))

)

(3): Conv2dNormActivation(

(0): Conv2d(2048, 256, kernel_size=(1, 1), stride=(1, 1))

)

)

(layer_blocks): ModuleList(

(0-3): 4 x Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(extra_blocks): LastLevelMaxPool()

)

)

(rpn): RegionProposalNetwork(

(anchor_generator): AnchorGenerator()

(head): RPNHead(

(conv): Sequential(

(0): Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

)

)

(cls_logits): Conv2d(256, 3, kernel_size=(1, 1), stride=(1, 1))

(bbox_pred): Conv2d(256, 12, kernel_size=(1, 1), stride=(1, 1))

)

)

(roi_heads): RoIHeads(

(box_roi_pool): MultiScaleRoIAlign(featmap_names=['0', '1', '2', '3'], output_size=(7, 7), sampling_ratio=2)

(box_head): TwoMLPHead(

(fc6): Linear(in_features=12544, out_features=1024, bias=True)

(fc7): Linear(in_features=1024, out_features=1024, bias=True)

)

(box_predictor): FastRCNNPredictor(

(cls_score): Linear(in_features=1024, out_features=2, bias=True)

(bbox_pred): Linear(in_features=1024, out_features=8, bias=True)

)

)

)

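11. Training the model

11-1. Losses of the two-stage detector

# Second stage (ROI heads): classifies each proposed region and refines its box

loss_classifier: classification loss for which class each proposed object belongs to

loss_box_reg: regression loss for adjusting each proposal's box toward the object's actual location

# First stage (RPN, region proposal network): predicts whether an object is present

loss_objectness: binary loss for whether each anchor contains an object or only background

loss_rpn_box_reg: regression loss for adjusting anchor boxes toward likely object locations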
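In training mode the model returns these four values in a dict, and the training code optimizes their plain sum. Using the values printed for one batch in this section, the arithmetic looks like this (plain floats stand in for the tensors):

```python
# Loss values from the single-batch printout in this section, as plain floats
loss = {
    'loss_classifier': 0.5043,
    'loss_box_reg': 0.1005,
    'loss_objectness': 0.2137,
    'loss_rpn_box_reg': 0.0123,
}
total_loss = sum(loss.values())  # same reduction as sum(each_loss for each_loss in loss.values())
print(round(total_loss, 4))      # 0.8308
```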

phase = 'train'
model.train()  # training mode: given targets, the model returns a dict of losses

for index, batch in enumerate(dloaders[phase]):
    images = batch[0]
    targets = batch[1]
    filenames = batch[2]
    images = list(image for image in images)
    targets = [{k: v for k, v in t.items()} for t in targets]  # list of per-image target dicts
    loss = model(images, targets)
    if index == 0:
        break

print(loss)

{'loss_classifier': tensor(0.5043, grad_fn=<NllLossBackward0>),

'loss_box_reg': tensor(0.1005, grad_fn=<DivBackward0>),

'loss_objectness': tensor(0.2137, grad_fn=<BinaryCrossEntropyWithLogitsBackward0>),

'loss_rpn_box_reg': tensor(0.0123, grad_fn=<DivBackward0>)}

✅ Define the training function

def train_one_epoch(dataloaders, model, optimizer, device):
    train_loss = defaultdict(float)  # dict whose missing keys start at 0.0
    val_loss = defaultdict(float)
    # torchvision detection models only return losses in train mode, so train mode is kept
    # for validation too; gradients are switched off there by set_grad_enabled below
    model.train()

    for phase in ['train', 'val']:
        for index, batch in enumerate(dataloaders[phase]):  # use the argument, not the global dloaders
            images = batch[0]
            targets = batch[1]
            filenames = batch[2]
            images = [image.to(device) for image in images]
            targets = [{k: v.to(device) for k, v in t.items()} for t in targets]  # per-image target dicts on the device

            with torch.set_grad_enabled(phase == 'train'):  # gradients only in the train phase
                loss = model(images, targets)
                total_loss = sum(each_loss for each_loss in loss.values())

            if phase == 'train':
                optimizer.zero_grad()
                total_loss.backward()
                optimizer.step()

                # VERBOSE_FREQ: print progress every VERBOSE_FREQ batches
                if (index > 0) and (index % VERBOSE_FREQ == 0):
                    text = f"{index}/{len(dataloaders[phase])} - "
                    for k, v in loss.items():
                        text += f'{k}: {v.item():.4f} '
                    print(text)

                for k, v in loss.items():
                    train_loss[k] += v.item()
                train_loss['total_loss'] += total_loss.item()
            # val phase
            else:
                for k, v in loss.items():
                    val_loss[k] += v.item()
                val_loss['total_loss'] += total_loss.item()

    # average over the number of batches
    for k in train_loss.keys():
        train_loss[k] /= len(dataloaders['train'])
        val_loss[k] /= len(dataloaders['val'])
    return train_loss, val_loss
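The accumulation in `train_one_epoch` relies on `defaultdict(float)`: a missing key starts at 0.0, so `+=` works on the first batch without any initialization. A stand-alone sketch of the accumulate-then-average pattern with made-up per-batch losses (plain floats instead of tensors):

```python
from collections import defaultdict

# Made-up per-batch loss dicts
batches = [
    {'loss_classifier': 0.6, 'loss_box_reg': 0.2},
    {'loss_classifier': 0.4, 'loss_box_reg': 0.1},
]

epoch_loss = defaultdict(float)  # missing keys start at 0.0
for loss in batches:
    for k, v in loss.items():
        epoch_loss[k] += v
    epoch_loss['total_loss'] += sum(loss.values())

for k in epoch_loss:
    epoch_loss[k] /= len(batches)  # mean over batches

print(dict(epoch_loss))
```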

✅ Define all settings in one place

data_dir = './DataSet/'

is_cuda = False

NUM_CLASSES = 2

IMAGE_SIZE = 448

BATCH_SIZE = 8

VERBOSE_FREQ = 100

DEVICE = torch.device('cuda' if torch.cuda.is_available() and is_cuda else 'cpu')  # is_available must be called; the bare function object is always truthy

dataloaders = build_dataloader(data_dir=data_dir, batch_size=BATCH_SIZE, image_size=IMAGE_SIZE)

model = build_model(num_classes=NUM_CLASSES)

model = model.to(DEVICE)

optimizer = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

✅ Train the model

num_epochs = 30
train_losses = []
val_losses = []

for epoch in range(num_epochs):
    train_loss, val_loss = train_one_epoch(dataloaders, model, optimizer, DEVICE)
    train_losses.append(train_loss)
    val_losses.append(val_loss)
    print(f"epoch:{epoch+1}/{num_epochs} - Train Loss: {train_loss['total_loss']:.4f}, Val Loss: {val_loss['total_loss']:.4f}")
    # save a checkpoint every 10 epochs
    if (epoch + 1) % 10 == 0:
        util.save_model(model.state_dict(), f'model_{epoch+1}.pth')
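The loop saves a checkpoint every 10 epochs, and the test step loads the epoch-30 file as the best model. If "best" should mean lowest validation loss, the epoch can be picked from `val_losses` after training. A pure-Python sketch; the helper `best_epoch` and the loss numbers are hypothetical:

```python
def best_epoch(val_losses, key='total_loss'):
    """Return (1-based epoch, loss) with the lowest validation loss for `key`."""
    values = [epoch_loss[key] for epoch_loss in val_losses]
    best_index = min(range(len(values)), key=values.__getitem__)
    return best_index + 1, values[best_index]

# Made-up per-epoch validation losses in the shape train_one_epoch returns
val_losses = [{'total_loss': 0.91}, {'total_loss': 0.72}, {'total_loss': 0.75}]
epoch, loss = best_epoch(val_losses)
print(epoch, loss)  # 2 0.72
```

Inside the training loop, the same comparison could instead call `util.save_model` whenever the current `val_loss['total_loss']` beats the best value seen so far, so the best epoch always has a checkpoint.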

✅ 모델 학습 결과 시각화

tr_loss_classifier = []

tr_loss_box_reg = []

tr_loss_objectness = []

tr_loss_rpn_box_reg = []

tr_loss_total = []

for tr_loss in train_losses:

tr_loss_classifier.append(tr_loss['loss_classifier'])

tr_loss_box_reg.append(tr_loss['loss_box_reg'])

tr_loss_objectness.append(tr_loss['loss_objectness'])

tr_loss_rpn_box_reg.append(tr_loss['loss_rpn_box_reg'])

tr_loss_total.append(tr_loss['total_loss'])

val_loss_classifier = []

val_loss_box_reg = []

val_loss_objectness = []

val_loss_rpn_box_reg = []

val_loss_total = []

for vl_loss in val_losses:
    val_loss_classifier.append(vl_loss['loss_classifier'])
    val_loss_box_reg.append(vl_loss['loss_box_reg'])
    val_loss_objectness.append(vl_loss['loss_objectness'])
    val_loss_rpn_box_reg.append(vl_loss['loss_rpn_box_reg'])
    val_loss_total.append(vl_loss['total_loss'])

plt.figure(figsize=(8, 4))

plt.plot(tr_loss_total, label="train_total_loss")

plt.plot(tr_loss_classifier, label="train_loss_classifier")

plt.plot(tr_loss_box_reg, label="train_loss_box_reg")

plt.plot(tr_loss_objectness, label="train_loss_objectness")

plt.plot(tr_loss_rpn_box_reg, label="train_loss_rpn_box_reg")

plt.plot(val_loss_total, label="val_total_loss")

plt.plot(val_loss_classifier, label="val_loss_classifier")

plt.plot(val_loss_box_reg, label="val_loss_box_reg")

plt.plot(val_loss_objectness, label="val_loss_objectness")

plt.plot(val_loss_rpn_box_reg, label="val_loss_rpn_box_reg")

plt.xlabel("epoch")

plt.ylabel("loss")

plt.grid(True)

plt.legend(loc='upper right')

plt.tight_layout()

12. Testing after training

✅ Load the best model's weight file

def load_model(ckpt_path, num_classes, device):
    checkpoint = torch.load(ckpt_path, map_location=device)  # the saved state_dict
    model = build_model(num_classes=num_classes)
    model.load_state_dict(checkpoint)
    model = model.to(device)
    model.eval()  # evaluation (inference) mode
    return model

model = load_model(ckpt_path='./model_30.pth', num_classes=NUM_CLASSES, device=DEVICE)

model

FasterRCNN(

(transform): GeneralizedRCNNTransform(

Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

Resize(min_size=(800,), max_size=1333, mode='bilinear')

)

(backbone): BackboneWithFPN(

(body): IntermediateLayerGetter(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(relu): ReLU(inplace=True)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): FrozenBatchNorm2d(256, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer2): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(512, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer3): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(1024, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(2048, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

)

)

)

(fpn): FeaturePyramidNetwork(

(inner_blocks): ModuleList(

(0): Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

)

(1): Conv2dNormActivation(

(0): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))

)

(2): Conv2dNormActivation(

(0): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))

)

(3): Conv2dNormActivation(

(0): Conv2d(2048, 256, kernel_size=(1, 1), stride=(1, 1))

)

)

(layer_blocks): ModuleList(

(0-3): 4 x Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(extra_blocks): LastLevelMaxPool()

)

)

(rpn): RegionProposalNetwork(

(anchor_generator): AnchorGenerator()

(head): RPNHead(

(conv): Sequential(

(0): Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

)

)

(cls_logits): Conv2d(256, 3, kernel_size=(1, 1), stride=(1, 1))

(bbox_pred): Conv2d(256, 12, kernel_size=(1, 1), stride=(1, 1))

)

)

(roi_heads): RoIHeads(

(box_roi_pool): MultiScaleRoIAlign(featmap_names=['0', '1', '2', '3'], output_size=(7, 7), sampling_ratio=2)

(box_head): TwoMLPHead(

(fc6): Linear(in_features=12544, out_features=1024, bias=True)

(fc7): Linear(in_features=1024, out_features=1024, bias=True)

)

(box_predictor): FastRCNNPredictor(

(cls_score): Linear(in_features=1024, out_features=2, bias=True)

(bbox_pred): Linear(in_features=1024, out_features=8, bias=True)

)

)

)

12-1. Confidence Threshold

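- A concept used in tasks such as object detection

- An object detection model locates objects in an input image: it predicts whether an object is present at many positions in the image and, for each detected object, outputs a bounding box and a confidence score

- The confidence threshold is the cutoff applied to these confidence scores

- e.g. with a confidence threshold of 0.8, the model keeps only objects whose confidence is 0.8 or higher

- Setting the confidence threshold appropriately improves object detection accuracy

- If the threshold is too low, unreliable detections are included in the results

- If the threshold is too high, even genuine objects can be missed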
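The thresholding rule above can be sketched in a few lines of NumPy. This is a minimal illustration, not code from the post: the helper name `filter_by_confidence` and the sample boxes, scores and class ids are hypothetical. It uses `>=` to match the "0.8 or higher" wording, whereas the post's `postprocess` function uses a strict `>`.

```python
import numpy as np

def filter_by_confidence(boxes, scores, labels, conf_thres=0.8):
    """Keep only the detections whose confidence score is at least conf_thres."""
    keep = scores >= conf_thres  # boolean mask, one entry per detection
    return boxes[keep], scores[keep], labels[keep]

# Hypothetical model output: 3 boxes in [x1, y1, x2, y2] pixel format
boxes = np.array([[10, 10, 50, 50],
                  [12, 12, 52, 52],
                  [100, 80, 150, 140]], dtype=np.float32)
scores = np.array([0.95, 0.40, 0.85], dtype=np.float32)
labels = np.array([0, 0, 1])  # hypothetical class ids

kept_boxes, kept_scores, kept_labels = filter_by_confidence(boxes, scores, labels, conf_thres=0.8)
print(kept_labels)  # [0 1]
```

Lowering `conf_thres` to 0.3 would also keep the 0.40 box, which is exactly the "too low" case described above: a near-duplicate, low-confidence detection slips through and has to be removed later by NMS.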

12-2. Non-Maximum Suppression(NMS)

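- Used to remove duplicate detections so that each object is identified by a single accurate, non-overlapping box

- How NMS works:

- Run the object detection model (it takes an image and outputs bounding boxes with confidence scores)

- Filter the bounding boxes: among overlapping boxes, keep only the most confident one and remove the others that overlap it, using IoU to measure the overlap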
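The filtering step above can be sketched from scratch with NumPy. This is a minimal greedy NMS, assuming boxes in [x1, y1, x2, y2] pixel coordinates; the function names `box_iou` and `greedy_nms` are hypothetical. In the post itself `torchvision.ops.nms` does this job, and like it, this sketch discards every box whose IoU with a higher-scoring kept box is greater than the threshold and returns the kept indices in decreasing score order.

```python
import numpy as np

def box_iou(box, others):
    """IoU between one box and an array of boxes, all in [x1, y1, x2, y2] format."""
    x1 = np.maximum(box[0], others[:, 0])
    y1 = np.maximum(box[1], others[:, 1])
    x2 = np.minimum(box[2], others[:, 2])
    y2 = np.minimum(box[3], others[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_box = (box[2] - box[0]) * (box[3] - box[1])
    area_others = (others[:, 2] - others[:, 0]) * (others[:, 3] - others[:, 1])
    return inter / (area_box + area_others - inter)

def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return the indices of the kept boxes, sorted by decreasing score."""
    order = np.argsort(scores)[::-1]  # most confident box first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        if order.size == 1:
            break
        rest = order[1:]
        ious = box_iou(boxes[best], boxes[rest])
        # drop every remaining box that overlaps the kept box by more than the threshold
        order = rest[ious <= iou_threshold]
    return np.array(keep, dtype=np.int64)

# Hypothetical detections: boxes 0 and 1 cover the same object (IoU is about 0.82)
boxes = np.array([[10, 10, 50, 50],
                  [12, 12, 52, 52],
                  [100, 80, 150, 140]], dtype=np.float32)
scores = np.array([0.95, 0.90, 0.85], dtype=np.float32)
print(greedy_nms(boxes, scores, iou_threshold=0.5))  # [0 2]
```

The duplicate box 1 is suppressed because it overlaps the higher-scoring box 0, while box 2 survives because it does not overlap anything. Raising the threshold above 0.82 would keep all three boxes.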

12-3. IoU(Intersection over Union)

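- A metric used in computer vision tasks such as object detection and segmentation to measure how well the model's prediction agrees with the ground-truth label

- It measures how much the bounding boxes (or segmentation masks) overlap, and is used to evaluate how accurate the prediction is

- The value lies between 0 and 1; the closer it is to 1, the larger the overlap and the more accurate the prediction

- How to compute IoU:

- Find the region where area A and area B overlap (their common part)

- Compute the intersection (how much they overlap)

- Compute the union (the total area covered by both regions, i.e. area A + area B - intersection)

- Compute IoU by dividing the intersection by the union (intersection / union)

- For a given object, the predicted bounding box with the largest IoU is most likely the box that represents that object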
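The four steps above map directly onto code. A minimal sketch, assuming boxes are given as corner coordinates (x1, y1, x2, y2) with x2 > x1 and y2 > y1; the function name `iou` is hypothetical. In formula form, IoU(A, B) = |A ∩ B| / (|A| + |B| - |A ∩ B|).

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    # 1) overlapping region: max of the top-left corners, min of the bottom-right corners
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # 2) intersection area (0 when the boxes do not overlap)
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    # 3) union = area A + area B - intersection, so the overlap is not counted twice
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    # 4) IoU = intersection / union
    return inter / union if union > 0 else 0.0

print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7, about 0.1429
print(iou((0, 0, 2, 2), (0, 0, 2, 2)))  # 1.0 (identical boxes)
print(iou((0, 0, 1, 1), (2, 2, 3, 3)))  # 0.0 (no overlap)
```

In the first call the two 2x2 boxes share a 1x1 square, so the intersection is 1 and the union is 4 + 4 - 1 = 7.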

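✅ Define a post-processing function that keeps only confident, non-overlapping detections (confidence threshold + NMS) for visualization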

def postprocess(prediction, conf_thres=0.3, IoU_threshold=0.2):  # thresholds kept low because the saved .pth is not the best-performing model
    pred_box = prediction['boxes'].cpu().detach().numpy()  # bounding boxes, [x1, y1, x2, y2]
    pred_label = prediction['labels'].cpu().detach().numpy()
    pred_conf = prediction['scores'].cpu().detach().numpy()

    # cheap pre-filter before NMS: drop the low-confidence small fry first
    valid_index = pred_conf > conf_thres  # keep only detections scoring above the threshold
    pred_box = pred_box[valid_index]
    pred_label = pred_label[valid_index]
    pred_conf = pred_conf[valid_index]

    # NMS: among boxes overlapping by more than IoU_threshold, keep only the highest-scoring one
    valid_index = nms(torch.tensor(pred_box.astype(np.float32)), torch.tensor(pred_conf), IoU_threshold)
    pred_box = pred_box[valid_index.numpy()]
    pred_label = pred_label[valid_index.numpy()]
    pred_conf = pred_conf[valid_index.numpy()]

    # pred_box has 4 columns (the box corners), so pred_conf and pred_label get a new axis
    # to become columns; the result has shape (N, 6): [x1, y1, x2, y2, conf, label]
    return np.concatenate((pred_box, pred_conf[:, np.newaxis], pred_label[:, np.newaxis]), axis=1)

✅ Predict objects on the validation data

pred_images = []
pred_labels = []

for index, (images, _, filenames) in enumerate(dataloaders['val']):  # targets are not used
    images = list(image.to(DEVICE) for image in images)
    filename = filenames[0]

    # convert the first image back to an (H, W, C) array for display
    image = make_grid(images[0].cpu().detach(), normalize=True).permute(1, 2, 0).numpy()
    image = (image * 255).astype(np.uint8)  # rescale the normalized [0, 1] values to 0-255 pixel values

    with torch.no_grad():
        prediction = model(images)

    prediction = postprocess(prediction[0])  # rows of [x1, y1, x2, y2, conf, label]
    # clip() returns a new array, so assign the result back
    prediction[:, 2] = prediction[:, 2].clip(min=0, max=image.shape[1])
    prediction[:, 3] = prediction[:, 3].clip(min=0, max=image.shape[0])

    xc = (prediction[:, 0] + prediction[:, 2]) / 2
    yc = (prediction[:, 1] + prediction[:, 3]) / 2
    w = prediction[:, 2] - prediction[:, 0]
    h = prediction[:, 3] - prediction[:, 1]
    cls_id = prediction[:, 5]

    # np.stack(axis=1): each 1-D array becomes one column, giving shape (N, 5)
    prediction_yolo = np.stack([xc, yc, w, h, cls_id], axis=1)

    pred_images.append(image)
    pred_labels.append(prediction_yolo)

    # stop after 3 batches
    if index == 2:
        break

pred_labels

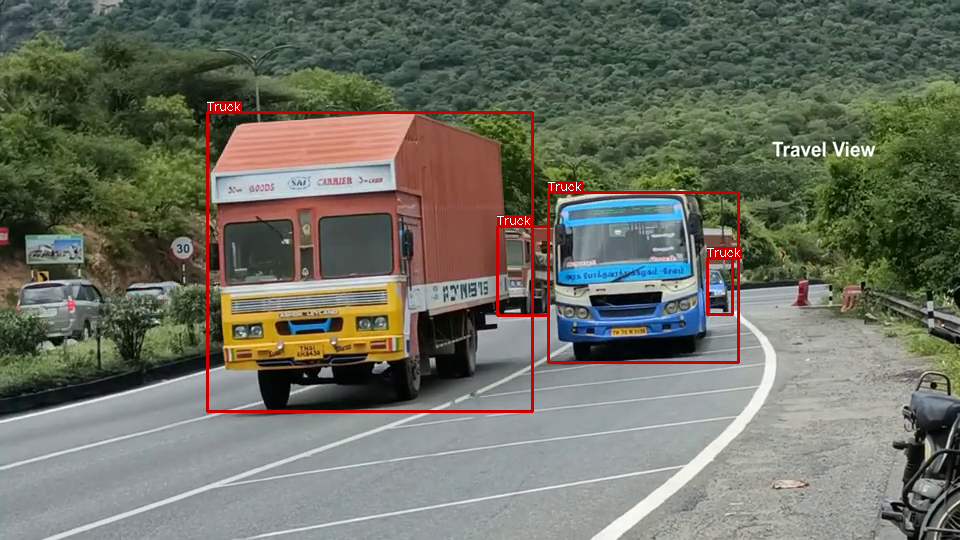
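Both the validation loop and the video loop repeat the same conversion from corner boxes [x1, y1, x2, y2] to center format [xc, yc, w, h] before calling `visualize`. A sketch of that step as a reusable helper; the name `xyxy_to_xcycwh` is hypothetical (it is not part of util.py), it expects the (N, 6) array returned by `postprocess`, and it keeps the coordinates in pixels exactly as the post's loops do.

```python
import numpy as np

def xyxy_to_xcycwh(pred):
    """Convert rows of [x1, y1, x2, y2, conf, label] into rows of [xc, yc, w, h, label]."""
    xc = (pred[:, 0] + pred[:, 2]) / 2  # box center x
    yc = (pred[:, 1] + pred[:, 3]) / 2  # box center y
    w = pred[:, 2] - pred[:, 0]         # box width
    h = pred[:, 3] - pred[:, 1]         # box height
    cls_id = pred[:, 5]                 # class id (the confidence column is dropped)
    return np.stack([xc, yc, w, h, cls_id], axis=1)  # each 1-D array becomes a column

# Hypothetical postprocess output: one box from (10, 20) to (50, 80), confidence 0.9, class 1
pred = np.array([[10., 20., 50., 80., 0.9, 1.]])
print(xyxy_to_xcycwh(pred))
```

With this helper, the five lines that compute `xc`, `yc`, `w`, `h` and `cls_id` in each loop could be replaced by a single call such as `prediction_yolo = xyxy_to_xcycwh(prediction)`.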

✅ Visualize the results with interact

@interact(index=(0, len(pred_images)-1))
def show_result(index=0):
    result = visualize(pred_images[index], pred_labels[index][:, 0:4], pred_labels[index][:, 4])
    plt.figure(figsize=(6, 6))
    plt.imshow(result)
    plt.show()

✅ Check real-time object detection on 'sample_video.mp4'

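video_path = './sample_video.mp4'

@torch.no_grad()
def model_predict(image, model):
    # assumes `transformer` is the same image transform that was applied to the dataset earlier in the post
    tensor_image = transformer(image)
    tensor_image = tensor_image.to(DEVICE)
    prediction = model([tensor_image])
    return prediction

video = cv2.VideoCapture(video_path)

while video.isOpened():
    ret, frame = video.read()
    if not ret:  # end of the video (or a read error): leave the loop instead of spinning forever
        break

    ori_h, ori_w = frame.shape[:2]
    image = cv2.resize(frame, dsize=(IMAGE_SIZE, IMAGE_SIZE))
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)  # OpenCV reads BGR; the training images were converted to RGB
    prediction = model_predict(image, model)
    prediction = postprocess(prediction[0])

    # scale the boxes from the IMAGE_SIZE x IMAGE_SIZE input back to the original frame size
    prediction[:, [0, 2]] *= (ori_w / IMAGE_SIZE)
    prediction[:, [1, 3]] *= (ori_h / IMAGE_SIZE)
    # clip to the original frame (not the resized image); clip() returns a new array
    prediction[:, [0, 2]] = prediction[:, [0, 2]].clip(min=0, max=ori_w)
    prediction[:, [1, 3]] = prediction[:, [1, 3]].clip(min=0, max=ori_h)

    xc = (prediction[:, 0] + prediction[:, 2]) / 2
    yc = (prediction[:, 1] + prediction[:, 3]) / 2
    w = prediction[:, 2] - prediction[:, 0]
    h = prediction[:, 3] - prediction[:, 1]
    cls_id = prediction[:, 5]
    prediction_yolo = np.stack([xc, yc, w, h, cls_id], axis=1)

    canvas = visualize(frame, prediction_yolo[:, 0:4], prediction_yolo[:, 4])
    cv2.imshow('camera', canvas)

    key = cv2.waitKey(1000)  # waits up to 1000 ms per frame; use a smaller value for smoother playback
    if key == 27:  # ESC: quit
        break
    if key == ord('s'):  # 's': pause until any key is pressed
        cv2.waitKey()

video.release()
cv2.destroyAllWindows()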
