
📍 Pixabay - free stock images

1. Collecting an image

import chromedriver_autoinstaller

import time

from selenium import webdriver

from selenium.webdriver.common.by import By

from urllib.request import Request, urlopen

driver = webdriver.Chrome()

driver.implicitly_wait(3)

url = 'https://pixabay.com/ko/images/search/cat'

driver.get(url)

time.sleep(3)

image_xpath = '/html/body/div[1]/div[1]/div/div[2]/div[3]/div/div/div[2]/div[1]/div/a/img'

image_url = driver.find_element(By.XPATH, image_xpath).get_attribute('src')

print('image_url',image_url)image_byte = Request(image_url, headers={'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36'})

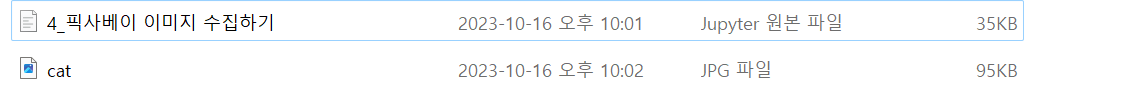

f = open('cat.jpg', 'wb')

f.write(urlopen(image_byte).read())

f.close()
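The request-and-save steps above can be wrapped in a small reusable helper. This is a sketch, not the author's code: `download_image` and `DEFAULT_USER_AGENT` are hypothetical names, and the 10-second `timeout` is an arbitrary choice. It relies only on `urllib.request`, which also understands `data:` URLs, so it can be tried without a network connection.

```python
import os
from urllib.request import Request, urlopen

# Hypothetical default; any browser-like User-Agent string should work
DEFAULT_USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)'


def download_image(image_url, path, user_agent=DEFAULT_USER_AGENT, timeout=10):
    """Fetch image_url with a browser-like User-Agent and write the bytes to path.

    Returns the number of bytes written.
    """
    request = Request(image_url, headers={'User-Agent': user_agent})
    # The response is a context manager, so the connection is closed after reading
    with urlopen(request, timeout=timeout) as response:
        data = response.read()
    # Create the parent folder first if the path includes one
    folder = os.path.dirname(path)
    if folder:
        os.makedirs(folder, exist_ok=True)
    with open(path, 'wb') as f:
        f.write(data)
    return len(data)
```

With this helper, the last step above becomes `download_image(image_url, 'cat.jpg')`.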

2. Collecting multiple images

To collect every image on the results page, find the container that holds the results and gather all of its `img` tags. Lazy-loaded images keep their address in `data-lazy-src`, so that attribute is read first and `src` is used as a fallback.

```python
driver = webdriver.Chrome()
driver.implicitly_wait(3)

url = 'https://pixabay.com/ko/images/search/cat'
driver.get(url)
time.sleep(3)

image_urls = []

# Container that holds the search results (absolute XPath, tied to the current layout)
image_area_xpath = '/html/body/div[1]/div[1]/div/div[2]/div[3]'
image_area = driver.find_element(By.XPATH, image_area_xpath)
image_elements = image_area.find_elements(By.TAG_NAME, 'img')  # find every img tag in the area

for image_element in image_elements:
    # Lazy-loaded images store the real URL in data-lazy-src; otherwise use src
    image_url = image_element.get_attribute('data-lazy-src')
    if image_url is None:
        image_url = image_element.get_attribute('src')
    print(image_url)
    image_urls.append(image_url)

# Save the images as cat0.jpg, cat1.jpg, ...
for i, image_url in enumerate(image_urls):
    image_byte = Request(image_url, headers={'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)'})
    with open(f'cat{i}.jpg', 'wb') as f:
        f.write(urlopen(image_byte).read())
```
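The `data-lazy-src`/`src` fallback can also be pulled into a function that does not need a browser, which makes it easy to check on its own. The sketch below assumes only that each element has a Selenium-style `get_attribute(name)` method; `collect_image_urls` is a hypothetical name, and skipping non-`http` values (such as `data:` placeholders) and duplicates is an extra step the loop above does not take. Note that `or` also falls back to `src` when `data-lazy-src` is an empty string.

```python
from typing import Iterable, List, Optional, Protocol


class HasAttributes(Protocol):
    """Anything with a Selenium-style get_attribute(), such as a WebElement."""

    def get_attribute(self, name: str) -> Optional[str]: ...


def collect_image_urls(elements: Iterable[HasAttributes]) -> List[str]:
    """Return each element's image URL, preferring data-lazy-src over src."""
    urls: List[str] = []
    seen = set()
    for element in elements:
        # Lazy-loaded thumbnails keep the real URL in data-lazy-src
        url = element.get_attribute('data-lazy-src') or element.get_attribute('src')
        # Skip missing values and non-HTTP sources such as data: placeholders
        if not url or not url.startswith('http'):
            continue
        # Keep only the first occurrence of each URL
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls
```

In the script above, the whole `for` loop could then be replaced by `image_urls = collect_image_urls(image_elements)`.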

3. Refactoring into functions

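- crawl_image(keyword, pages): collects the image URLs from the first `pages` result pages for `keyword`
- crawl_and_save_image(keyword, pages): downloads those images into a folder named after `keyword`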

`crawl_image` visits each result page, applies the same `data-lazy-src`/`src` logic as before, and returns the collected URLs instead of saving them.

```python
import os
import time

from selenium.webdriver.common.by import By


def crawl_image(keyword, pages):
    """Collect image URLs from the first `pages` Pixabay search-result pages."""
    image_urls = []
    for page in range(1, pages + 1):
        # Assumption: Pixabay selects the result page with the `pagi` query parameter
        url = f'https://pixabay.com/ko/images/search/{keyword}/?pagi={page}'
        driver.get(url)
        time.sleep(3)

        image_area_xpath = '/html/body/div[1]/div[1]/div/div[2]/div[3]'
        image_area = driver.find_element(By.XPATH, image_area_xpath)
        image_elements = image_area.find_elements(By.TAG_NAME, 'img')  # every img tag in the area

        for image_element in image_elements:
            # Lazy-loaded images store the real URL in data-lazy-src; otherwise use src
            image_url = image_element.get_attribute('data-lazy-src')
            if image_url is None:
                image_url = image_element.get_attribute('src')
            # Skip empty values and non-HTTP placeholders that cannot be saved as files
            if image_url and image_url.startswith('http'):
                print(image_url)
                image_urls.append(image_url)
    return image_urls


# Reuses the driver opened in step 2
kitty_urls = crawl_image('kitty', 1)
```
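Paging through results only needs one URL per page. A minimal sketch, assuming (as in the comment above) that Pixabay selects the page with a `pagi` query parameter, which is worth confirming in the address bar after moving to page 2; `build_search_urls` and its `lang` parameter are hypothetical, with `'ko'` matching the URLs used in this post. `urllib.parse.quote` percent-encodes the keyword, so non-ASCII searches such as `고양이` still produce a valid URL.

```python
from typing import List
from urllib.parse import quote, urlencode


def build_search_urls(keyword: str, pages: int, lang: str = 'ko') -> List[str]:
    """Return Pixabay search URLs for result pages 1..pages.

    Assumption: the page number is passed in the `pagi` query parameter.
    """
    base = f'https://pixabay.com/{lang}/images/search/{quote(keyword)}/'
    return [f'{base}?{urlencode({"pagi": page})}' for page in range(1, pages + 1)]
```

Inside `crawl_image`, the page loop could then iterate over `build_search_urls(keyword, pages)` and call `driver.get(url)` for each entry.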

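`crawl_and_save_image` takes the URLs returned by `crawl_image` and saves each one under its original file name.

```python
import os
from urllib.request import Request, urlopen

from selenium import webdriver


def crawl_and_save_image(keyword, pages):
    """Download every image found by crawl_image() into a folder named after keyword."""
    image_urls = crawl_image(keyword, pages)

    # Create the folder relative to the current working directory
    folder_path = os.path.join(os.getcwd(), keyword)
    os.makedirs(folder_path, exist_ok=True)

    for image_url in image_urls:
        image_byte = Request(image_url, headers={'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)'})
        # Use the last path segment of the URL (without any query string) as the file name
        filename = image_url.split('?')[0].split('/')[-1]
        with open(os.path.join(folder_path, filename), 'wb') as f:
            f.write(urlopen(image_byte).read())


driver = webdriver.Chrome()
driver.implicitly_wait(3)

crawl_and_save_image('kitten', 2)
```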
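Naming files after the last URL segment can still overwrite an earlier download when two pages return the same file name. A sketch of a safer naming helper using only `urllib.parse` and `os.path`; `unique_filename` is a hypothetical name, and appending `_1`, `_2`, ... is just one possible collision rule.

```python
import os
from urllib.parse import unquote, urlparse


def unique_filename(image_url: str, folder: str, default: str = 'image.jpg') -> str:
    """Build a path in folder from the URL's last path segment without overwriting files."""
    # urlparse drops the query string and fragment; unquote decodes %xx escapes
    name = os.path.basename(unquote(urlparse(image_url).path)) or default
    stem, ext = os.path.splitext(name)
    candidate = os.path.join(folder, name)
    counter = 1
    # Append _1, _2, ... until the name is not taken
    while os.path.exists(candidate):
        candidate = os.path.join(folder, f'{stem}_{counter}{ext}')
        counter += 1
    return candidate
```

In `crawl_and_save_image`, the `open` call could then use `unique_filename(image_url, folder_path)` instead of joining `folder_path` and `filename` directly.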
